Anyone can automate end-to-end tests!

Our AI Test Agent enables anyone who can read and write English to become an automation engineer in less than an hour.

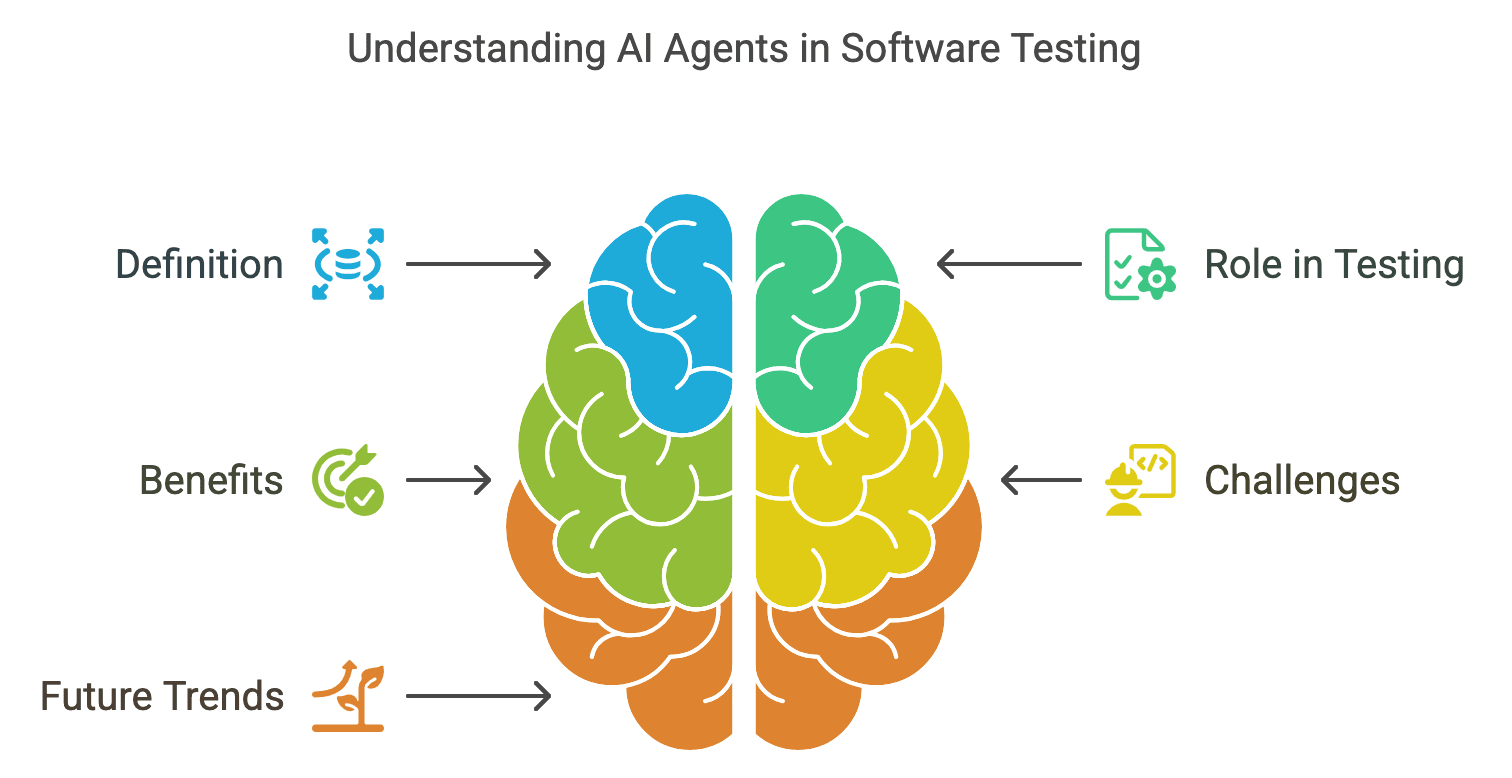

Artificial intelligence is changing how software is built, tested, and shipped, and it’s quickly becoming part of everyday work. Right now, AI agents in software testing are getting a lot of attention because of what they can do and the impact they’re already making.

But what exactly is an AI agent, and what does it do? Think of it as a smart assistant that understands its surroundings and makes decisions on its own to achieve a goal. Once given a task, these agents can think and act independently.

AI agents can be simple, like controlling the temperature in a room, or advanced, like humanoid robots or Mars rovers. Their use is growing rapidly—by 2028, an estimated 33% of enterprise software applications will incorporate agentic AI, a massive jump from less than 1% in 2024.

Businesses are already seeing the benefits. Around 90% of companies say generative AI agents have improved their workflows, making processes smoother and more efficient. In software development, AI agents are proving to be game changers, helping programmers complete tasks 126% faster.

In this article, we’ll explore how AI agents are being used in software testing, their key features, different types, and how they’re changing the way testing is done.

AI agents are playing a bigger role in software testing, improving efficiency, accuracy, and speed. They help teams by handling repetitive tasks, analyzing large amounts of data, and even identifying potential issues before they occur. This makes it easier for testers to concentrate on more challenging work while ensuring better software quality.

AI agents in software testing are smart systems that use machine learning, natural language processing, and other AI-based technologies to handle testing tasks. These agents can work on their own or support human testers in different ways.

AI agents can take over repetitive testing tasks like regression testing, which helps save time and reduces human mistakes. This speeds up software releases and allows teams to focus on more important tasks.

They can process large amounts of data to spot patterns and issues, helping testers concentrate on problem areas. This focused testing improves software quality and increases efficiency.

By using past data, AI agents can forecast possible defects or failures, helping teams fix problems before they happen. Catching risks early can save businesses a lot of money on fixing bugs later.

AI agents can create test cases automatically from application requirements, for example test cases for a registration page or a shopping cart. This ensures thorough testing and reduces the chances of missing any important features.

These agents get better with each testing cycle by learning from past results. This ongoing improvement helps maintain software quality and keeps users satisfied.

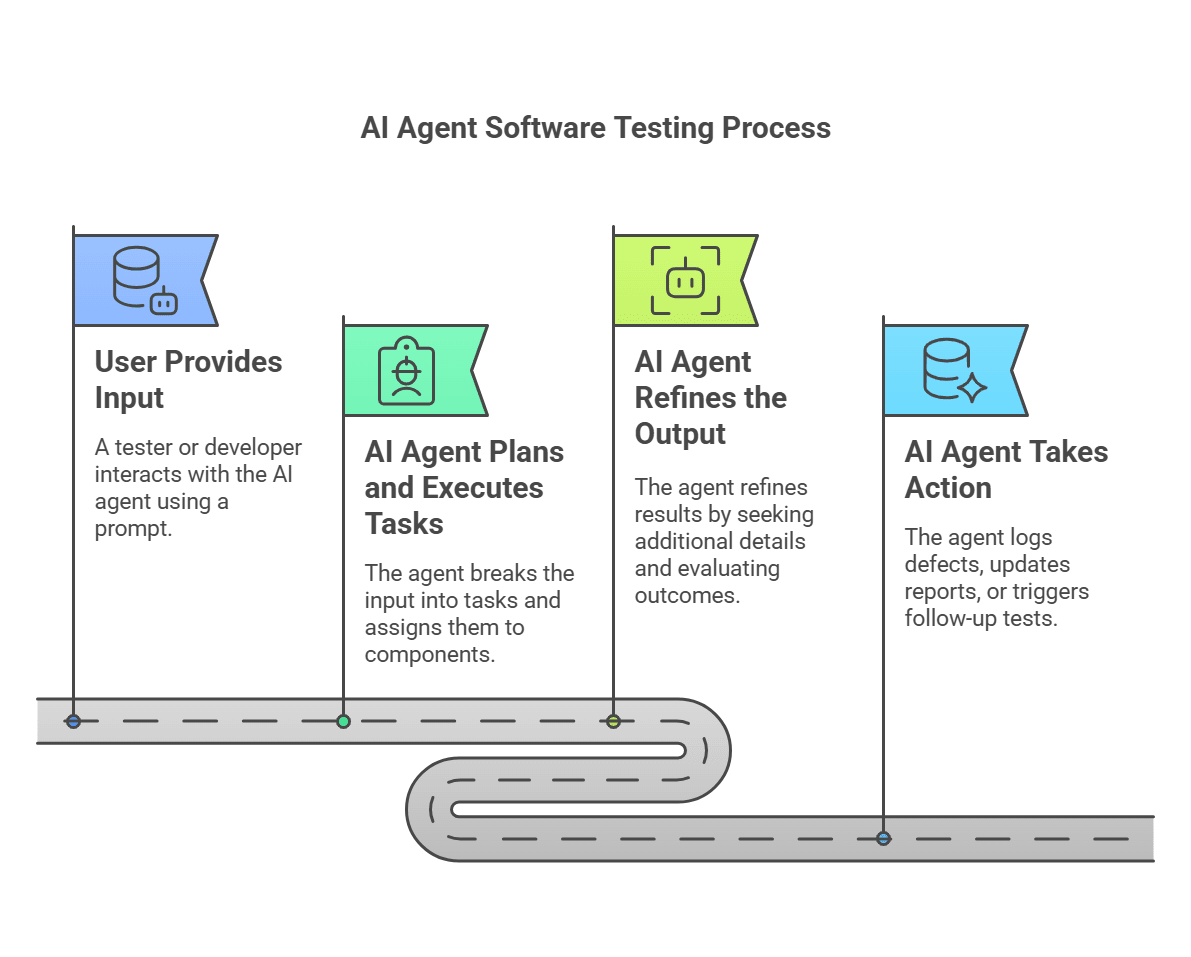

AI agents can handle complex software testing tasks by automating workflows, analyzing test results, and ensuring software quality with minimal manual effort. These agents follow a structured approach to complete testing processes efficiently.

A tester or developer interacts with the AI agent using a simple prompt, such as requesting a test case execution, debugging insights, or performance analysis. If needed, the agent asks for clarification to refine the request.

The agent processes the input, breaks it into smaller tasks, and assigns them to specialized components. These components use test data, automation scripts, and past learnings to carry out the required actions. They also interact with different testing tools and environments to perform the tasks.

As the process runs, the agent may request additional details from the user to improve accuracy. It continuously evaluates the results, adjusting as needed, and presents refined findings to the user.

Once testing is complete, the agent can execute necessary actions, such as logging defects, updating reports, or triggering follow-up tests. The goal is to deliver reliable results with minimal intervention.
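
The workflow described above (prompt, task breakdown, execution, follow-up actions) can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the `TestAgent` class, its keyword-based `plan` method, and the `login` and `checkout` handlers are hypothetical names, not part of any real tool.

```python
from dataclasses import dataclass, field

@dataclass
class TestAgent:
    """Toy agent: plan subtasks from a prompt, run them, then act on the results."""
    handlers: dict                                # subtask name -> callable returning True on pass
    defects: list = field(default_factory=list)   # defects logged as a follow-up action

    def plan(self, prompt: str) -> list:
        # Hypothetical planner: pick every known subtask mentioned in the prompt.
        return [name for name in self.handlers if name in prompt.lower()]

    def run(self, prompt: str) -> dict:
        subtasks = self.plan(prompt)
        if not subtasks:
            # Mirrors the "ask for clarification" step.
            return {"status": "needs_clarification", "results": {}}
        results = {name: self.handlers[name]() for name in subtasks}
        # Follow-up action: log a defect for each failed subtask.
        self.defects.extend(name for name, ok in results.items() if not ok)
        return {"status": "done", "results": results}

agent = TestAgent(handlers={"login": lambda: True, "checkout": lambda: False})
report = agent.run("Run the login and checkout tests")
```

A real agent would replace the keyword match with a language model and the lambdas with calls into testing tools, but the control flow stays the same.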

Here are the different categories of AI agents and how they can be applied to software testing:

Machine learning (ML) AI agents enhance software testing by learning from test data, predicting failures, optimizing test execution, and improving overall software quality. These agents adapt based on patterns in test results, user interactions, and application behavior, making testing smarter and more efficient.

ML models analyze historical defects, code changes, and user behavior to generate high-impact test cases.

Prioritizes tests based on risk assessment, ensuring critical areas are tested first.

Example: AI-driven test case generation tools that focus on frequently failing components.
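
To make risk-based prioritization concrete, here is a minimal sketch. It assumes each test has a historical failure rate and a flag saying whether it touches recently changed code; the scoring formula and the `change_weight` value are illustrative assumptions, not a trained ML model.

```python
def risk_score(failure_rate: float, touches_changed_code: bool,
               change_weight: float = 0.5) -> float:
    # Assumed scoring rule: past failure rate plus a fixed bonus for changed code.
    return failure_rate + (change_weight if touches_changed_code else 0.0)

def prioritize(tests: list[dict]) -> list[str]:
    # Highest-risk tests come first.
    ranked = sorted(
        tests,
        key=lambda t: risk_score(t["failure_rate"], t["touches_changed_code"]),
        reverse=True,
    )
    return [t["name"] for t in ranked]

tests = [
    {"name": "test_profile", "failure_rate": 0.02, "touches_changed_code": False},
    {"name": "test_checkout", "failure_rate": 0.30, "touches_changed_code": True},
    {"name": "test_search", "failure_rate": 0.10, "touches_changed_code": True},
]
order = prioritize(tests)
# order == ["test_checkout", "test_search", "test_profile"]
```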

Identifies changes in UI elements and updates test scripts automatically.

Reduces test maintenance efforts when applications undergo UI modifications.

Example: AI-powered Selenium-based frameworks that auto-fix broken test scripts.

Uses ML models to predict areas in the code that are likely to fail.

Analyzes past test failures to determine the root cause and suggest fixes.

Example: AI-driven bug prediction systems that highlight potential problem areas in software.

Generates synthetic test data based on real-world patterns.

Ensures data diversity for better test coverage without using sensitive production data.

Example: AI-driven data masking and synthetic test data generation tools.
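
As a rough illustration of synthetic test data, the sketch below generates fake user records without touching production data. It is a rule-based stand-in rather than a learned model, and the field names, value ranges, and the `example.test` domain are all assumptions.

```python
import random
import string

def synthetic_users(count: int, seed: int = 0) -> list[dict]:
    rng = random.Random(seed)  # seeded so test runs are reproducible
    users = []
    for i in range(count):
        # Random lowercase name of 3 to 10 letters.
        name = "".join(rng.choices(string.ascii_lowercase, k=rng.randint(3, 10)))
        users.append({
            "id": i + 1,
            "email": f"{name}{i}@example.test",  # index suffix keeps emails unique
            "age": rng.randint(18, 90),
            "country": rng.choice(["US", "IN", "DE", "BR"]),
        })
    return users

users = synthetic_users(5)
```

Seeding the generator means a failing test can be replayed with exactly the same data.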

Model-based reflex agents are a more advanced type of AI agent that can handle partially observable environments in software testing. Unlike simple reflex agents, which rely only on immediate inputs, model-based agents maintain an internal representation of the system under test (SUT) to infer missing or unobserved information.

A model-based reflex agent:

Collects real-time inputs from the application or test environment.

Maintains a structured understanding of how the system behaves over time.

Uses past observations to determine the current system state even if some details are missing.

Makes testing decisions based on both the current input and its model of the system.

Example: If an element is missing, the agent checks whether a UI change has occurred (e.g., a menu now collapses instead of being always visible). Instead of failing immediately, it adapts based on the expected behavior.

Example: In a multi-step form, the agent tracks previous inputs to verify that later steps behave as expected, even if the system doesn’t explicitly show them.

Example: If an API call fails due to network latency, the agent can infer whether retrying would resolve the issue rather than marking the test as failed immediately.

Example: The agent tracks the structure of a web page and detects whether an element has moved instead of assuming it was removed.
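
The collapsed-menu example can be sketched as a tiny model-based agent. The class name, event names, and returned action strings are hypothetical; the point is only that the decision depends on both the current input and the stored model.

```python
class MenuAwareAgent:
    """Keeps a small internal model of the UI so a hidden element is not treated as missing."""

    def __init__(self):
        # Internal model: last known state of the (hypothetical) navigation menu.
        self.model = {"menu_collapsed": False}

    def observe(self, event: str) -> None:
        # Update the model from events seen so far.
        if event == "menu_collapsed":
            self.model["menu_collapsed"] = True
        elif event == "menu_expanded":
            self.model["menu_collapsed"] = False

    def decide(self, visible_elements: set, target: str) -> str:
        if target in visible_elements:
            return "click"
        if self.model["menu_collapsed"]:
            # The target is probably hidden inside the collapsed menu.
            return "expand_menu_then_click"
        return "fail"

agent = MenuAwareAgent()
before = agent.decide({"home"}, "settings")   # no reason to expect a hidden target
agent.observe("menu_collapsed")
after = agent.decide({"home"}, "settings")    # model explains the missing element
```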

Goal-based agents are designed to achieve specific objectives by planning and executing a sequence of actions. Unlike reflex agents, which react based on predefined rules or internal models, goal-based agents evaluate different possibilities, predict outcomes, and determine the best course of action to reach a desired state in software testing.

The agent is given a testing objective, such as finding critical defects, ensuring UI responsiveness, or achieving test coverage.

The agent evaluates different test paths or scenarios to determine which actions will help meet the goal.

Uses search algorithms, AI-driven strategies, or optimization techniques to identify the most efficient test execution plan.

Runs tests, analyzes results, and adapts actions to stay aligned with the goal.

Example: The agent receives a goal to maximize test coverage with minimal test execution time. It selects only the most relevant test cases, eliminating redundant ones.

Example: The agent's goal is to uncover critical defects. It explores different user interactions, edge cases, and error-prone paths to increase the chances of finding bugs.
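
One simple way to approximate the "maximum coverage, minimal execution" goal is a greedy selection: keep picking the test that covers the most still-uncovered areas. This is a sketch of that heuristic, not the method any particular tool uses, and the test names and coverage areas are made up. Greedy selection is not guaranteed to find the smallest possible set.

```python
def select_tests(coverage: dict[str, set[str]]) -> list[str]:
    """Greedy selection: repeatedly take the test covering the most uncovered areas."""
    uncovered = set().union(*coverage.values())
    chosen = []
    while uncovered:
        # Sorting the names makes tie-breaking deterministic.
        best = max(sorted(coverage), key=lambda name: len(coverage[name] & uncovered))
        chosen.append(best)
        uncovered -= coverage[best]
    return chosen

coverage = {
    "test_login": {"auth", "session"},
    "test_cart": {"cart", "pricing"},
    "test_checkout": {"cart", "pricing", "payment", "session"},
    "test_logout": {"session"},
}
selected = select_tests(coverage)
# selected == ["test_checkout", "test_login"]
```

Here two tests cover everything the four tests cover together, so `test_cart` and `test_logout` are redundant for coverage purposes.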

A learning agent is an AI system that continuously improves its testing capabilities by learning from past experiences, feedback, and real-world interactions. Unlike rule-based or reflex agents, learning agents do not solely rely on predefined rules. Instead, they adapt and refine their approach to testing based on patterns, test results, and application changes.

These agents use machine learning, reinforcement learning, and neural networks to enhance test automation, optimize test coverage, and detect defects more efficiently.

Use Case: Detects UI changes (e.g., modified element IDs, changed layouts) and automatically updates test scripts instead of failing tests.

Example: A Selenium-based test fails because a button's selector changed, but the learning agent updates the script by recognizing similar patterns.
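
The idea of "recognizing similar patterns" can be illustrated with plain string similarity from Python's standard library. Real self-healing tools typically weigh many more signals (text, position, attributes), so treat this as a simplified sketch; the element IDs and the `0.6` threshold are assumptions.

```python
from difflib import SequenceMatcher

def heal_selector(broken_id: str, current_ids: list[str], threshold: float = 0.6):
    """Return the current element id most similar to the broken one, or None."""
    best_id, best_score = None, 0.0
    for candidate in current_ids:
        score = SequenceMatcher(None, broken_id, candidate).ratio()
        if score > best_score:
            best_id, best_score = candidate, score
    # Only accept a replacement that is similar enough to be a plausible rename.
    return best_id if best_score >= threshold else None

healed = heal_selector("submit-btn", ["nav-home", "submit-button", "footer-link"])
# healed == "submit-button"
```

The threshold matters: set it too low and the agent may silently click the wrong element instead of reporting a genuine failure.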

Use Case: Learns from previous test execution data to prioritize high-impact test cases, ensuring critical defects are caught early.

Example: Before each release, the agent runs the most failure-prone test cases first, reducing test cycle time.

Use Case: Analyzes historical test failures and code changes to predict areas where defects are likely to occur.

Example: The agent suggests additional test cases for newly modified code sections based on past bug patterns.

Use Case: Creates realistic and diverse test data by learning from production data, improving test accuracy and coverage.

Example: Instead of using static test data, the agent generates dynamic test inputs based on real-world user behavior.

A Multi-Agent System (MAS) consists of multiple autonomous AI agents working together in a shared test environment. These agents can operate independently or collaboratively to complete testing tasks more efficiently. Unlike single AI agents, MAS distributes testing workloads, optimizes execution, and adapts to complex test scenarios dynamically.

Use Case: Parallel execution of test cases across different environments and configurations.

Use Case: Ensuring consistent user experience across different browsers and devices.

Example: One agent tests on Chrome, another on Safari, while a third verifies mobile responsiveness—all running simultaneously.
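
The "one agent per environment" pattern maps naturally onto a thread pool. In this sketch, `run_agent` is a placeholder that always passes; in practice it would drive a real browser or device session.

```python
from concurrent.futures import ThreadPoolExecutor

def run_agent(environment: str) -> tuple[str, str]:
    # Placeholder for a real browser session; here every environment "passes".
    return environment, "passed"

def run_in_parallel(environments: list[str]) -> dict[str, str]:
    # One worker per environment so all agents run at the same time.
    with ThreadPoolExecutor(max_workers=max(1, len(environments))) as pool:
        return dict(pool.map(run_agent, environments))

results = run_in_parallel(["chrome", "safari", "mobile"])
```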

Use Case: Simulating thousands of concurrent users to analyze system behavior under load.

Example: Multiple agents send simulated traffic to a web application, replicating real-world usage patterns to identify bottlenecks.

Use Case: Uncovering unexpected defects by autonomously navigating the application.

Example: Different agents interact with the UI, API, and database, uncovering errors that structured test cases might miss.

Natural Language Processing (NLP) agents use language-based algorithms to understand and generate human language. In software testing, these agents can make test case generation more efficient in several ways:

NLP agents analyze software requirements written in plain language and generate test cases automatically, reducing manual effort. This allows teams to focus on other critical tasks.

By interpreting user stories and requirements, NLP agents help ensure that test cases match user expectations, making collaboration between technical and non-technical teams smoother.

NLP agents transform high-level test descriptions into executable scripts, speeding up testing and reducing the need for coding expertise.

When software requirements change, NLP agents update existing test cases automatically, ensuring they stay relevant to the latest version of the software.

At Rapid Innovation, we see the impact computer vision agents can have on UI/UX testing. These agents use image processing and machine learning to analyze visual elements in applications, helping ensure a smooth user experience and high-quality interface.

Our agents identify differences between expected and actual UI layouts, quickly spotting issues like misaligned elements, color variations, and font inconsistencies. This automation speeds up testing and improves accuracy, leading to a refined final product.

By tracking how users interact with UI elements, our agents review click patterns, hover effects, and scrolling behavior. These insights help improve usability and guide design decisions that enhance user experience.

We ensure UI components meet accessibility standards, making visual elements clear and usable for people with disabilities. This approach expands your user reach and aligns with modern application development standards.

AI agents are changing how software testing works, making the process faster and easier. Here are three key benefits they bring:

You don’t need coding skills to build and manage test scripts. AI agents let anyone on the team write tests in simple, plain English.

These AI-powered tools can run manual test cases on their own, saving time and effort.

Since tests are written in natural language, they don’t depend on technical details. This means fewer updates and fixes are needed over time.

Now, let’s dive into how AI agents are being used in software testing.

AI-native testing tools can automatically create test cases based on your software’s requirements. They do this using the following capabilities:

AI can understand what you want to test just by reading a simple description. It doesn’t just process the text—it actually executes the steps.

For example, if you’re testing an e-commerce website, you might give the AI a prompt like:

“Find and add a Kindle to the shopping cart.”

The AI will then follow those steps, just like a real user:

1. Searching for “Kindle”

2. Clicking on a specific product

3. Adding it to the cart

Tools like BotGauge let you create test cases using plain language. You don’t need to write complex scripts—just describe what you want to happen.

For example:

1. Find and select a Kindle

2. Add it to the shopping cart

3. Go to the cart

4. Check that the page includes the word “Kindle”

This makes test automation faster, easier, and more accessible, even for teams without coding experience.
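
To show what turning plain-language steps into actions might look like, here is a deliberately simple rule-based sketch covering the four steps above. It is not how BotGauge works internally; the regular expressions and action names are hypothetical, and a real tool would use a language model rather than fixed patterns.

```python
import re

# Hypothetical patterns mapping a plain-language step to an action name.
PATTERNS = [
    (re.compile(r"^find and select an? (.+)$", re.I), "search_and_open"),
    (re.compile(r"^add it to the shopping cart$", re.I), "add_to_cart"),
    (re.compile(r"^go to the cart$", re.I), "open_cart"),
    (re.compile(r'^check that the page includes the word "(.+)"$', re.I), "assert_text"),
]

def parse_step(step: str) -> tuple:
    """Return (action, argument) for a step, or raise if the step is not understood."""
    for pattern, action in PATTERNS:
        match = pattern.match(step.strip())
        if match:
            return (action, match.group(1) if match.groups() else None)
    raise ValueError(f"Unrecognized step: {step!r}")

steps = [
    "Find and select a Kindle",
    "Add it to the shopping cart",
    "Go to the cart",
    'Check that the page includes the word "Kindle"',
]
plan = [parse_step(s) for s in steps]
```

Each `(action, argument)` pair could then be handed to a browser driver for execution.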

AI testing runs test cases automatically without needing any manual effort. As soon as code changes are pushed to the repository, test runs start on their own. These AI agents can also integrate with defect management systems, automatically logging bugs and sharing reports with the team. Since they can run tests 24/7 and in parallel, teams get broader test coverage without lifting a finger.

AI-driven tools like BotGauge can update test scripts on their own when there are changes to the app’s UI or API. This means less time spent fixing broken scripts and more reliable automated testing.

For example, if a login button changes from a `<button>` tag to an `<a>` tag, BotGauge won’t just break. It will recognize the change and still interact with the element correctly.
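
One way to survive a tag change like this is to locate the element by its visible label instead of its tag. The sketch below does that with Python's built-in HTML parser. It illustrates the general idea only and makes no claim about BotGauge's actual implementation; the class and function names are hypothetical.

```python
from html.parser import HTMLParser

class ClickableFinder(HTMLParser):
    """Collects the tag of every <button> or <a> whose visible text matches the label."""

    def __init__(self, label: str):
        super().__init__()
        self.label = label
        self.current = None   # clickable tag currently open, if any
        self.matches = []

    def handle_starttag(self, tag, attrs):
        if tag in ("button", "a"):
            self.current = tag

    def handle_data(self, data):
        if self.current and data.strip() == self.label:
            self.matches.append(self.current)

    def handle_endtag(self, tag):
        if tag == self.current:
            self.current = None

def find_clickable(html: str, label: str) -> list:
    finder = ClickableFinder(label)
    finder.feed(html)
    finder.close()
    return finder.matches

old_page = find_clickable('<button id="login">Log in</button>', "Log in")
new_page = find_clickable('<a href="/login">Log in</a>', "Log in")
```

Both versions of the page yield exactly one match, so a test written against the label "Log in" keeps working after the markup changes.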

AI helps shift testing earlier in development, so issues are caught before they become bigger problems. It gives developers real-time feedback, suggests which tests to run, and even spots potential bugs while they code. With AI-driven DevTestOps and TestOps, teams can scale testing and deliver high-quality releases faster.

AI-powered testing, especially using machine learning and computer vision, can automatically spot visual differences in an app’s UI across different devices and screen sizes. By comparing screenshots with a baseline, you can quickly catch visual bugs in web and mobile applications—issues that traditional functional testing might miss.

Instead of manually checking each screen, you can save a sample screenshot as a reference and compare new ones against it using this command: `compare screen to stored value "Saved Screenshot"`
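
Under the hood, a baseline comparison boils down to measuring how much two images differ. The following sketch does this for small grayscale images stored as nested lists. The function names and the 1% tolerance are assumptions, and production tools use far more robust, perceptual comparisons.

```python
def diff_ratio(baseline: list[list[int]], current: list[list[int]]) -> float:
    """Fraction of pixels that differ between two same-sized grayscale images."""
    same_shape = len(baseline) == len(current) and all(
        len(a) == len(b) for a, b in zip(baseline, current)
    )
    if not same_shape:
        raise ValueError("Screenshots must have the same dimensions")
    total = sum(len(row) for row in baseline)
    changed = sum(p != q for a, b in zip(baseline, current) for p, q in zip(a, b))
    return changed / total if total else 0.0

def matches_baseline(baseline, current, tolerance: float = 0.01) -> bool:
    # Accept the screenshot if at most `tolerance` of the pixels changed.
    return diff_ratio(baseline, current) <= tolerance

saved = [[0, 0], [255, 255]]
fresh = [[0, 9], [255, 255]]   # one of four pixels changed
```

With these inputs, `diff_ratio(saved, fresh)` is 0.25, so `matches_baseline(saved, fresh)` returns `False` and the change is flagged as a visual difference.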

AI can get smarter over time by learning from past test results, making testing more accurate and reducing manual work. It can also analyze trends from previous test cycles to predict potential issues, helping teams address problems before they escalate.

AI is making software testing faster, smarter, and more reliable. Here are some real-life examples of how big companies are using AI-powered testing to improve their products:

Facebook deployed an AI tool called Sapienz to find bugs before they cause problems. Using search-based techniques, Sapienz automatically generates test cases that exercise the app and catch potential issues such as crashes.

It speeds up testing by running thousands of test cases in just a few hours—something that would take human testers much longer. This helps keep Facebook’s mobile apps stable, even with frequent updates.

Google uses AI-driven tools like Android VTS (Vendor Test Suite) to test Android apps across different devices. These tools automatically create test cases and detect bugs, reducing manual effort.

AI helps ensure Android apps work smoothly on a wide range of devices, improving compatibility and performance.

Microsoft uses AI to study past test results, system requirements, and user behavior to create better test cases. This allows them to check how Windows performs across different versions and hardware setups.

AI testing helps Microsoft release more reliable updates, catching issues faster and reducing bugs in new Windows versions.

IBM uses AI to analyze logs, past defects, and customer data to create optimized test cases for cloud services. This helps uncover hidden issues that traditional testing might miss.

AI-driven testing makes IBM’s cloud services more reliable by improving test coverage and efficiency.

BotGauge is redefining test automation with the power of Generative AI, making software testing smarter, faster, and more reliable. Traditional testing is slow and often misses critical issues, but BotGauge takes things to the next level. It doesn’t just automate tests—it intelligently generates comprehensive test cases, predicts potential failures, and adapts as your software evolves. The result? Better coverage, fewer surprises, and a smoother development process.

Instead of just automating repetitive tasks, BotGauge dynamically creates test cases based on code changes and user behavior, cutting down on manual effort.

Using predictive analytics, BotGauge pinpoints areas likely to fail, so teams can address issues before they become major problems.

No need for complex scripts—just describe the test in plain English, and BotGauge takes care of the rest. Even non-technical team members can create thorough test scenarios effortlessly.

Whether you're working on a small app or a massive enterprise system, BotGauge keeps up. It automates testing in real time, ensuring rapid iterations without compromising quality.

AI agents such as BotGauge are changing the game in software testing by automating repetitive tasks, expanding test coverage, and quickly identifying defects, all from tests written in plain English. With the power of generative AI and natural language processing, these agents streamline the testing process, making it faster and more efficient.

As AI continues to advance, these agents will become even smarter, taking software testing to the next level. We’re only at the beginning of this shift, but it’s already laying the foundation for higher-quality software, faster releases, and less manual effort. The future of testing with AI looks bright, and it’s only getting better from here.

AI in software testing helps automate tasks like test execution, data validation, and error detection. It improves efficiency, expands test coverage, and reduces human effort, making the process faster and more reliable.

An AI tester is a tool or system that uses artificial intelligence to automate and optimize software testing. It can analyze test cases, detect patterns, and execute tests with minimal human intervention, improving accuracy and speed.

AI will make software testing smarter by automating repetitive tasks, predicting defects, and improving test coverage. It will reduce manual effort, speed up releases, and enhance software quality, making testing more efficient and scalable.

Written by

SREEPAD KRISHNAN

With 10+ years of experience, Sreepad has worked across Customer Success, Professional Services, Tech Support, Escalations, Engineering, QA, and Software Testing. He has collaborated with customers, vendors, and partners to deliver impactful solutions. Passionate about bridging technology and customer success, he excels in test strategy, automation, performance testing, and ensuring software quality.
